Survey System and Its Performance

Our client is an online survey platform provider with thousands of users, mostly from EU countries. The platform supports regular surveys as well as case management, 360-degree feedback, and e-assessment surveys. Each survey type has its own workflow, the last step of which is a powerful online analysis tool that extracts the necessary data and presents it in a user-friendly way.

Platform users create surveys daily, and most surveys are sent to a large number of recipients. The platform therefore experiences heavy load when many people open their personal links and start responding at the same time. To address this, our client needed to identify possible bottlenecks and find a solution that would keep the platform scalable.

Every survey sent to a recipient is backed by around 50 database tables. Even though a survey can be split across several pages, it is not possible to load only part of the relevant data from the database: each request needs the entire survey model to support logic rules, question randomisation, validation, and so on.

A significant share of these tables holds survey configuration, display modes, and logic settings. Survey owners configure these during the creation stage and rarely edit them afterwards. We therefore extracted these tables into a separate domain and stored them in an external Memcached-based cache, avoiding multiple SQL queries per recipient per request.
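A minimal sketch of the idea, assuming the rarely edited configuration rows of one survey are merged into a single JSON document stored under one cache key, so one cache read replaces several SQL queries. The function names, the `survey-config:{id}` key format, and the choice of JSON are hypothetical; the source does not say how values are keyed or serialized.

```python
import json
from typing import Any


def build_config_snapshot(
    survey_id: int, tables: dict[str, list[dict[str, Any]]]
) -> dict[str, Any]:
    """Merge rows from several rarely edited configuration tables into one document."""
    return {"survey_id": survey_id, "tables": tables}


def cache_key(survey_id: int) -> str:
    # Hypothetical key scheme. Memcached keys are limited to 250 bytes and may
    # not contain spaces or control characters; this format satisfies both.
    return f"survey-config:{survey_id}"


def serialize(snapshot: dict[str, Any]) -> bytes:
    # Compact, deterministic JSON. The value must still fit within Memcached's
    # item size limit (1 MB by default).
    return json.dumps(snapshot, separators=(",", ":"), sort_keys=True).encode("utf-8")


def deserialize(payload: bytes) -> dict[str, Any]:
    return json.loads(payload.decode("utf-8"))
```

In a test, a plain dict can stand in for the Memcached client: store `serialize(snapshot)` under `cache_key(survey_id)` and read it back with `deserialize`.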

We also implemented an additional layer that caches the full survey model, retrieves it from the cache, and invalidates it correctly. Because the code base was mature and full of existing features, we extracted the appropriate interfaces and created two implementations: one for NHibernate models and another for the so-called cached viewmodels. This approach allows cached viewmodels and NHibernate models to be substituted for each other.
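A minimal sketch of that substitution, written in Python rather than the platform's .NET stack (NHibernate is a .NET ORM). The interface and class names are hypothetical, a dict stands in for the NHibernate-backed data access, and another dict stands in for Memcached. The point is only that calling code depends on the shared interface, so either implementation can be passed in.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class SurveyModel:
    """Hypothetical read-only view of a survey."""
    survey_id: int
    title: str
    page_count: int


class SurveyModelSource(Protocol):
    """Hypothetical interface extracted from the existing code base."""
    def get_survey(self, survey_id: int) -> SurveyModel: ...


class DatabaseSurveySource:
    """Stands in for the NHibernate implementation; a dict replaces the ORM."""
    def __init__(self, rows: dict[int, SurveyModel]) -> None:
        self._rows = rows
        self.query_count = 0  # counts simulated database round trips

    def get_survey(self, survey_id: int) -> SurveyModel:
        self.query_count += 1
        return self._rows[survey_id]


class CachedSurveySource:
    """Stands in for the cached-viewmodel implementation; a dict replaces Memcached."""
    def __init__(self, fallback: SurveyModelSource) -> None:
        self._fallback = fallback
        self._cache: dict[int, SurveyModel] = {}

    def get_survey(self, survey_id: int) -> SurveyModel:
        if survey_id not in self._cache:
            self._cache[survey_id] = self._fallback.get_survey(survey_id)
        return self._cache[survey_id]


def render_page(source: SurveyModelSource, survey_id: int) -> str:
    # Depends only on the interface, so either implementation fits here.
    survey = source.get_survey(survey_id)
    return f"{survey.title} ({survey.page_count} pages)"
```

Wrapping the database source in `CachedSurveySource` means repeated calls to `render_page` hit the database once per survey instead of once per request.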

The background caching logic performs the following steps:

- Attempting to find a survey in the cache.

- If the survey is found, determining whether it is still valid by comparing the survey’s ‘last edit date’ with its ‘date put in cache’.

- If the survey is valid, proceeding to the next steps.

- If the survey is outdated, re-caching it and using the fresh copy for the current request.
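The lookup, validation, and re-caching flow can be sketched as follows, assuming a dict in place of the Memcached client and injected callables for loading the full model and reading the survey's last edit date. All names are hypothetical, the source does not say how the last edit date is retrieved, and treating a cache miss like an outdated entry is an assumption. The timestamp is taken before the model is loaded, so an edit that lands during loading makes the entry look outdated on the next request instead of leaving a stale copy marked valid.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Optional


@dataclass(frozen=True)
class CacheEntry:
    model: dict           # the cached survey model (simplified to a dict)
    cached_at: datetime   # the "date put in cache"


class SurveyCache:
    """Hypothetical sketch; a dict stands in for Memcached."""

    def __init__(
        self,
        load_from_db: Callable[[int], dict],
        last_edit_date: Callable[[int], datetime],
        # Must use the same time zone as the last edit dates it is compared with.
        clock: Callable[[], datetime] = lambda: datetime.now(timezone.utc),
    ) -> None:
        self._store: dict[int, CacheEntry] = {}
        self._load_from_db = load_from_db
        self._last_edit_date = last_edit_date
        self._clock = clock

    def _recache(self, survey_id: int) -> dict:
        cached_at = self._clock()  # taken before loading, see lead-in
        model = self._load_from_db(survey_id)
        self._store[survey_id] = CacheEntry(model, cached_at)
        return model

    def get(self, survey_id: int) -> dict:
        # Step 1: attempt to find the survey in the cache.
        entry: Optional[CacheEntry] = self._store.get(survey_id)
        if entry is None:
            return self._recache(survey_id)  # assumed: a miss is handled like an outdated entry
        # Step 2: the entry is valid if the survey was not edited after caching.
        if self._last_edit_date(survey_id) <= entry.cached_at:
            return entry.model  # Step 3: valid, proceed with the cached copy
        return self._recache(survey_id)  # Step 4: outdated, re-cache and use it
```

Injecting the clock keeps the sketch deterministic in tests; in production the default UTC clock would be used.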

As mentioned above, a cached survey behaves exactly like a survey loaded directly from the database, so the system can switch between the two modes through a simple configuration change. This switch is very helpful when we need to fall back to direct database calls.
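A sketch of such a switch, assuming a hypothetical `survey_data_source` setting with the values `database` and `cache`. The setting name, the factory parameters, and the decision to fall back to the database on missing or unrecognized values are assumptions, not details from the source.

```python
from enum import Enum
from typing import Callable, Protocol


class SurveySource(Protocol):
    def get_survey(self, survey_id: int) -> dict: ...


class DataSourceMode(Enum):
    DATABASE = "database"  # direct queries through the ORM
    CACHE = "cache"        # cached viewmodels


def make_survey_source(
    config: dict[str, str],
    database_factory: Callable[[], SurveySource],
    cache_factory: Callable[[], SurveySource],
) -> SurveySource:
    """Pick an implementation from the hypothetical 'survey_data_source' setting."""
    raw = config.get("survey_data_source", DataSourceMode.DATABASE.value)
    try:
        mode = DataSourceMode(raw)
    except ValueError:
        mode = DataSourceMode.DATABASE  # unknown value: use the safe default
    return cache_factory() if mode is DataSourceMode.CACHE else database_factory()
```

Defaulting to the database keeps a misconfigured deployment working, only without the caching benefit.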

This approach significantly reduced the response time for each recipient. Caching eliminated a large number of SQL queries and improved the overall health of the system, especially at the database level. The platform now handles its current load spikes easily, with no impact on users.

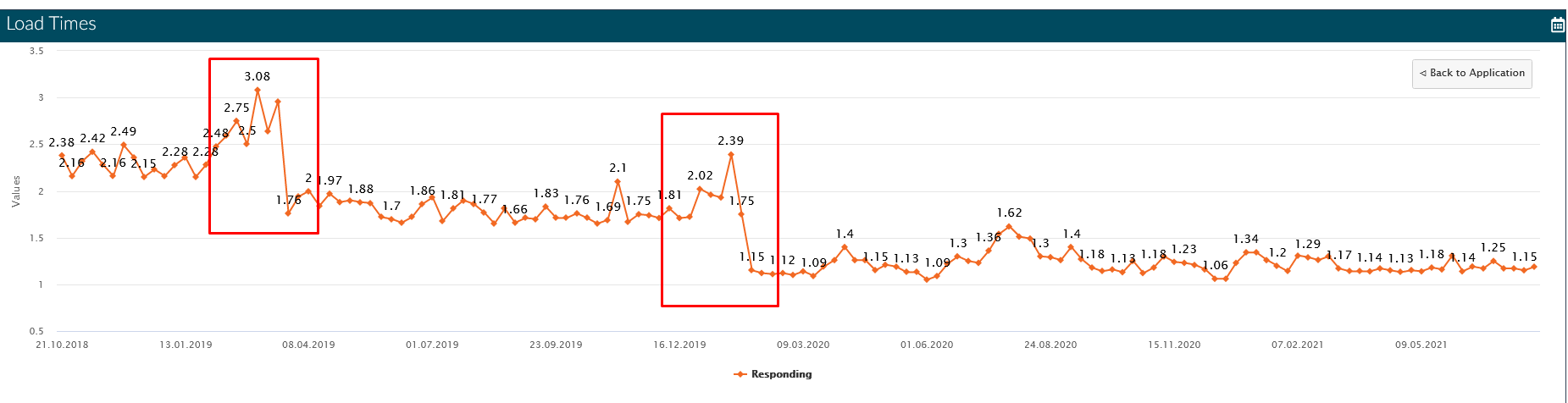

The chart above shows the average load time of the survey participation page, in seconds, with metrics collected weekly.

The initial caching implementation was rolled out to production in spring 2019, and average page load time dropped from 2.4 seconds to 1.8 seconds (a 25% reduction). In early 2020, we rolled out version 2 of the caching layer, which included several bug fixes and reduced page load time from 1.8 seconds to 1.2 seconds (a further reduction of about 33%). In total, page load time was cut in half, from 2.4 seconds to 1.2 seconds.
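Because each percentage is measured against the load time just before that rollout, the two reductions compound multiplicatively rather than adding up. Checking with the reported averages of 2.4, 1.8, and 1.2 seconds:

$$
\frac{2.4 - 1.8}{2.4} = 0.25, \qquad
\frac{1.8 - 1.2}{1.8} = \frac{1}{3} \approx 0.33, \qquad
1 - (1 - 0.25)\left(1 - \tfrac{1}{3}\right) = 1 - 0.75 \cdot \tfrac{2}{3} = 0.5
$$

The overall reduction is therefore 50%, matching the drop from 2.4 s to 1.2 s.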
